In this post we look at an issue we had while implementing a specific form of data entry for our application. Basically, there was an oversight in our reasoning about CRUD operations that needed fixing. It also shows the power of GUIDs.

The situation

We are currently in the final sprint towards delivering our first new product release to a group of customers. As one might expect, we are testing the functionality that has been built so far, and we ran into some issues. One of them was buried deep in the framework and masked by another bug in the Silverlight UI.

To get a better understanding of the issue we found, it’s important to have some basic knowledge of how our framework works, especially in terms of creating and updating records. We decided early on that creating a record is basically the same as updating one. The only difference in our service call is that we provide an empty GUID instead of an existing GUID. The framework then detects that this is a new record, creates a GUID for it and inserts it into the Entity Framework context, and all works well (except for the incidental problem with insert).
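The convention described above can be sketched roughly as follows. This is a minimal illustration, not the actual framework code: `Record`, `RecordStore` and the in-memory dictionary are hypothetical stand-ins for the business object, the service and the Entity Framework context.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a business object; not the real framework type.
public class Record
{
    public Guid Id { get; set; }
    public string Value { get; set; }
}

// Hypothetical in-memory store standing in for the Entity Framework context.
public class RecordStore
{
    private readonly Dictionary<Guid, Record> rows = new Dictionary<Guid, Record>();

    public int Count { get { return rows.Count; } }

    // An empty GUID means "insert"; any other GUID means "update".
    public Record Save(Guid id, string value)
    {
        if (id == Guid.Empty)
        {
            var created = new Record { Id = Guid.NewGuid(), Value = value };
            rows.Add(created.Id, created);
            return created;
        }

        Record existing = rows[id]; // throws KeyNotFoundException for unknown ids
        existing.Value = value;
        return existing;
    }
}
```

The appeal of this design is that the client needs only one service call for both operations; the downside, as the rest of this post shows, is that the client must learn the generated GUID before it saves the same record again.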

Recently we added two modules to our UI, each of which consists of a data grid. The data grid is filled with a default set of rows from one table, and once the user fills in certain values, records are created in another table. To make this work, a view was added to the Entity Framework model to provide the correct rows. We then have some code in the service layer to make sure we don’t get duplicate rows when there are records available in the second table.

The bug

A bug occurred when we entered a value into a record that was not yet available in the second table, saved the module’s data and then changed that value. We ended up with two records in the second table instead of one. A short debugging session revealed the cause: both update actions were sent with an empty GUID, so both were interpreted as an insert.
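To make the failure concrete, here is a small reproduction under simplifying assumptions. The dictionary and the `save` delegate are hypothetical stand-ins for the second table and the service call; the point is only that the grid row keeps its empty GUID between the two saves.

```csharp
using System;
using System.Collections.Generic;

public static class DuplicateInsertDemo
{
    // Returns the number of records in the (hypothetical) second table
    // after the same grid row has been saved twice.
    public static int SaveSameRowTwice()
    {
        var table = new Dictionary<Guid, string>();

        // Stand-in for the service call: an empty GUID inserts, anything else updates.
        Func<Guid, string, Guid> save = (id, value) =>
        {
            Guid key = id == Guid.Empty ? Guid.NewGuid() : id;
            table[key] = value;
            return key;
        };

        Guid rowId = Guid.Empty;      // the grid row has no record yet
        save(rowId, "first value");   // insert; the returned id is never matched back to the row
        save(rowId, "changed value"); // rowId is still empty, so this is a second insert

        return table.Count;
    }
}
```

Calling `DuplicateInsertDemo.SaveSameRowTwice()` yields two records, which is the duplicate we saw in the second table.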

The solution

Solving it was not that easy. To prevent this kind of bug, we already return an updated (or, for that matter, new) business object from the service. However, for a new record the client knows no id (it’s empty), so there is nothing to match the returned object to. That means we can’t update the record in the data grid, which leads to our problem.

To fix it, we would want to create a new GUID in the Silverlight client, send it to the server and use it there as well. However, our service would then no longer interpret the call as an insert. What we can do instead is send this new GUID in the actual key property of the business object we want to update. This required an update of the framework: in case of an insert (the passed GUID is empty), we now check whether the business object already has a GUID available as its key, and if so we use that GUID instead of generating a new one.

So now we can generate a GUID in the client, send it with the insert and know which object was updated as soon as it is received in the service callback. This allows us to match the rows in our data grid to the business object and update accordingly, meaning no more duplicate records.
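The round trip can be sketched like this. All types here (`BusinessObject`, `GridRow`, `Service`, `Client`) are hypothetical simplifications and not the real framework or Silverlight classes; in particular, the `Exists` flag is an assumption about how the client might remember that a record has already been created.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical business object; the real framework type differs.
public class BusinessObject
{
    public Guid Id { get; set; }
    public string Value { get; set; }
}

// Hypothetical grid row kept in the client.
public class GridRow
{
    public Guid PendingId { get; set; } // GUID generated in the client
    public bool Exists { get; set; }    // true once the record exists in the second table
    public string Value { get; set; }
}

public class Service
{
    public readonly Dictionary<Guid, BusinessObject> Table = new Dictionary<Guid, BusinessObject>();

    // An empty passedId means insert, reusing the id carried by the object when it has one.
    public BusinessObject Save(Guid passedId, BusinessObject data)
    {
        Guid id = passedId;
        if (id == Guid.Empty)
        {
            id = data.Id != Guid.Empty ? data.Id : Guid.NewGuid();
        }

        var stored = new BusinessObject { Id = id, Value = data.Value };
        Table[id] = stored;
        return stored;
    }
}

public static class Client
{
    public static void SaveRow(Service service, List<GridRow> grid, GridRow row)
    {
        if (row.PendingId == Guid.Empty)
        {
            row.PendingId = Guid.NewGuid();
        }

        // Still signal an insert with an empty GUID, but carry the client GUID in the key property.
        Guid passedId = row.Exists ? row.PendingId : Guid.Empty;
        BusinessObject result = service.Save(
            passedId, new BusinessObject { Id = row.PendingId, Value = row.Value });

        // Callback: match the returned object to its row by the GUID we generated.
        GridRow match = grid.First(r => r.PendingId == result.Id);
        match.Exists = true;
        match.Value = result.Value;
    }
}
```

Saving the same row twice through `Client.SaveRow` now leaves a single record in `Service.Table`, because the second call is sent as an update with the GUID the client already knows.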

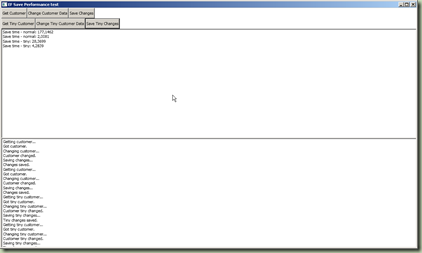

In the end, this is the code we created to make sure we use the supplied GUID if one is available:

    idPropertyName = string.Format("{0}{1}", idPropertyName, IdSuffix);
    PropertyInfo idProperty = dataType.GetProperty(idPropertyName);

    // Default to a freshly generated GUID for the new record.
    Guid id = Guid.NewGuid();
    if (idProperty != null)
    {
        if (changedData.PropertyDictionary.ContainsKey(idPropertyName))
        {
            PropertyObject idPropertyObject = (PropertyObject)changedData.PropertyDictionary[idPropertyName];
            Guid requestedId = (Guid)idPropertyObject.CurrentValue;

            // Prefer the GUID supplied by the client when it is not empty.
            if (!requestedId.Equals(Guid.Empty))
            {
                id = requestedId;
            }
        }
        idProperty.SetValue(data, id, null);
    }
    this.Id = id;
    SetProperty(this.IdProperty, id);